Chapter 5 | Initial-Value Problems for Ordinary Differential Equations¶

Initial-Value Problem (IVP)

Basic IVP (one variable first order)

Approximate the solution \(y(t)\) to a problem of the form

subject to an initial condition

Higher-Order Systems of First-Order IVP

Moreover, adding the number of unknowns, it becomes approximating \(y_1(t), \dots y_m(t)\) to a problem of the form

for \(t \in [a, b]\), subject to the initial conditions

Higher-Order IVP

Or adding the number of order, it becomes mth-order IVP of the form

for \(t \in [a, b]\), subject to the inital conditions

General Idea¶

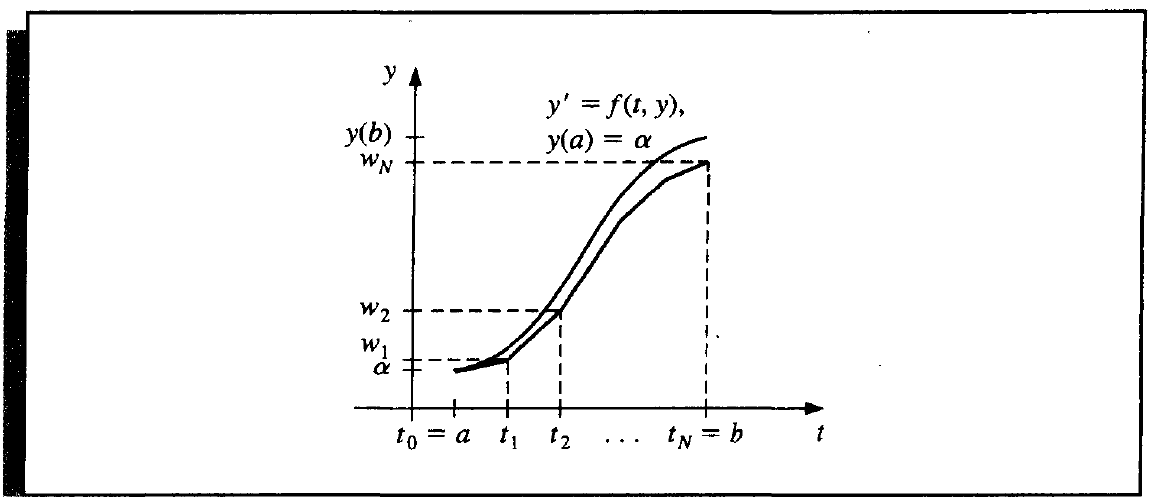

Of all the method we introduce below, we can only approximate some points, but not the whole function \(y(t)\). The approximation points are called mesh points. Moreover, we will only approximate the equally spaced points. Suppose we apporximate the values at \(N\) points on \([a, b]\), then the mesh points are

where \(h = (b - a) / N\) is the step size. To get the value between mesh points, we can use interpolation method. Since we know the derivative value \(f(t, y)\) at the mesh point, it's nice to use Hermit interpolation or cubic spline interpolation.

Availability and Uniqueness¶

Before finding out the approximation, we need some conditions to guarantee its availability and uniqueness.

Definition 5.0

\(f(t, y)\) is said to satisfy a Lipschitz condition in the variable \(y\) on a set \(D \in \mathbb{R}^2\) if \(\exists\ L > 0\), s.t.

\(L\) is called a Lipschitz constant for \(f\).

Definition 5.1

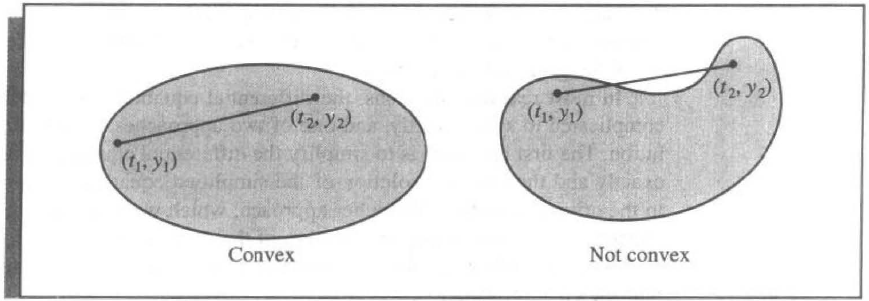

A set \(D \in \mathbb{R}^2\) is said to be convex if

Theorem 5.0 | Sufficient Condition

\(f(t, y)\) is defined on a convex set \(D \in \mathbb{R}^2\). If \(\exists\ L > 0\) s.t.

then \(f\) satisfies a Lipschitz condition on \(D\) in the variable \(y\) with Lipschitz constatnt \(L\).

Theorem 5.1 | Unique Solution

\(f(t, y)\) is continuous on \(D = \{(t, y) | a \le t \le b, -\infty < y < \infty\}\)(\(D\) is convex). If \(f\) satisfies a Lipschitz condition on \(D\) in the variable \(y\), then the IVP

has a unique solution \(y(t)\) for \(a \le t \le b\).

Definition 5.2

The IVP

is said to be a well-posed problem if

- A unique solution \(y(t)\) exists;

- \(\forall\ \varepsilon > 0, \exists\ k(\varepsilon) > 0\), s.t. \(\forall |\varepsilon_0| < \varepsilon\), and \(|\delta(t)|\) is continuous with \(|\delta(t)| < \varepsilon\) on \([a, b]\), a unique solution \(z(t)\) to the IVP

exists with

Numerical methods will always be concerned with solving a perturbed problem since roundoff error is unavoidable. Thus we want the problem to be well-posed, which means the perturbation will not affect the result approxiamtion a lot.

Theorem 5.2

\(f(t, y)\) is continuous on \(D = \{(t, y) | a \le t \le b, -\infty < y < \infty\}\)(\(D\) is convex). If \(f\) satisfies a Lipschitz condition on \(D\) in the variable \(y\), then the IVP

is well-posed.

Besides, we also need to discuss the sufficient condition of mth-order system of first-order IVP.

Similarly, we have the definition of Lipschitz condition and it's sufficient for the uniqueness of the solution.

Definition 5.3

\(f(t, y_1, \dots, y_m)\) define on the convex set

is said to satisfy Lipschitz condition on \(D\) in the variables \(y_1, \dots, y_m\) if \(\exists\ L > 0\), s.t

Theorem 5.3

if \(f(t, y_1, \dots, y_m)\) satisfy Lipschitz condition, then the mth order systems of first-order IVP has a unique solution \(y_1(t), \dots, y_n(t)\).

Euler's Method¶

To measure the error of Taylor methods (Euler's method is Taylor's method of order 1), we first give the definition of local truncation error, which somehow measure the truncation error at a specified step.

Definition 5.4

The difference method

has the local truncation error

Forward / Explicit Euler's Method¶

We use Taylor's Theorem to derive Euler's method. Suppose \(y(t)\) is the unique solution of IVP, for each mesh points, we have

and since \(y'(t_i) = f(t_i, y(t_i))\), we have

If \(w_i \approx y(t_i)\) and we delete the remainder term, we get the (Explicit) Euler's method.

(Explicit) Euler's Method

The local truncation error of Euler's Method is

Theorem 5.4

The error bound of Euler's method is

where \(M\) is the upper bound of \(|y''(t)|\).

Although it has an exponential term (which is not such an accurate error bound), the most important intuitive is that the error bound is proportional to \(h\).

If we consider the roundoff error, we have the following theorem.

Theorem 5.5

Suppose there exists roundoff error and \(u_i = w_i + \delta_i\), \(i = 0, 1, \dots\), then

where \(\delta\) is the upper bound of \(\delta_i\).

This comes to a different case, we can't make \(h\) too small, and the optimal choice of \(h\) is

Backward/ Implicit Euler's Method¶

Inversely, if we use Taylor's Theorem in the following way,

Thus we get the Implicit Euler's Method,

Implicit Euler's Method

Since \(w_{i + 1}\) is on the both side, we may use some methods in Chapter 2 to solve out the equation.

The local truncation error of implicit Euler's method is

Trapezoidal Method¶

Notice the local truncation errors of two Euler's method are

If we combine these two, we then obtain a method of \(O(h^2)\) local truncation error, which is called Trapezoidal Method.

Trapezoidal Method

Higher-Order Taylor Method¶

In Euler's methods, we only employ first order Taylor polynomials. If we expand it more to nth Taylor polynomials, we have

and since \(y^{(k)}(t) = f^{(k - 1)}(t, y(t))\), we have

Taylor Method of order n

where

Theorem 5.6

The local truncation error of Taylor method of order \(n\) is \(O(h^n)\).

Runge-Kutta Method¶

Although Taylor method gives more accuracy as \(n\) increase, but it's not an easy job to calculate nth derivative of \(f\). Here we introduce a new method called Runge-Kutta Method, which has low local truncation error and doesn't need to compute derivatives. It's based on Taylor's Theorem in two variables.

Theorem 5.7 | Taylor's Theorem in two variables (Recap)

\(f(x, y)\) has continous \(n + 1\) partial derivatives on the neigbourhood of \(A(x_0, y_0)\), denoted by \(U(A)\), then \(\forall\ (x, y) \in U(A)\), denote \(\Delta x = x - x_0\), \(\Delta y = y - y_0\), \(\exists\ \theta \in (0, 1)\), s.t.

where

and

Derivation of Midpoint Method

To equate

with

except for the last residual term \(R_1\), we have

Thus

Since the term \(R_1\) is only concerned with the 2nd-order partial derivatives of \(f\), if they are bounded, then \(R_1\) is \(O(h^2)\), the order of the local truncation error. Replace \(T^{(2)}(t,y)\) by the formula above in the Taylor method of order 2, we have the Midpoint Method.

Midpoint Method

Derivation of Modified Euler Method and Heun's Method

Similarly, approximate

with

But it doesn't have sufficient equation to determine the 4 unknowns \(a_1, a_2, \alpha_2, \delta_2\) above. Instead we have

Thus a number of \(O(h^2)\) methods can be derived, and they are generally in the form

The most important are the following two.

Modified Euler Method

Heun's Method

In a similar manner, approximate \(T^{(n)}(t, y)\) with

where

with different \(m\), we can derive quite a lot of Runge-Kutta methods.

The most common Runge-Kutta method is given below.

Runge-Kutta Order Four

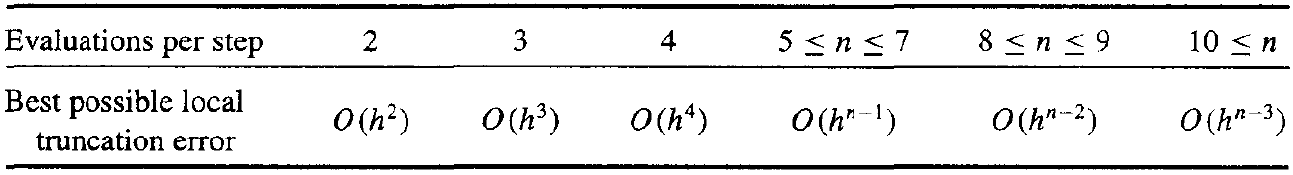

The local trancation error of Runge-Kutta are given below, where \(n\) is the number of evaluations per step.

Property

Compared to Taylor's method, evaluate \(f(t, y)\) the same times, Runge-Kutta gives the lowest error.

Multistep Method¶

In the previous section, the difference equation only relates \(w_{i + 1}\) with \(w_{i}\). Multistep Method discuss the situation of relating more term of previous predicted \(w\).

Definition 5.5

An m-step multistep method for solving the IVP

has a difference equation

with the starting values

If \(b_m = 0\), it's called explicit, or open. Otherwise, it's called implicit, or closed.

Similarly, we first define local truncation error for multistep method to measure the error at a specified step.

Definition 5.6

For a m-step multistep method, the local truncation error at \((i + 1)\)th step is

Adams Method¶

In this section, we only consider the case of \(a_{m - 1} = 1\) and \(a_i = 0\), \(i \ne m - 1\).

To derive the Adams method, we start from a simple formula.

i.e.

We cannot integrate \(f(t, y(t))\) without knowing \(y(t)\). Instead we use an interpolating polynomial \(P(t)\) by points \((t_0, w_0),\ \dots,\ (t_i, w_i)\) to approximate \(f(t, y)\). Thus we approximate \(y(t_{i + 1})\) by

For convenience, we use Newton backward-difference formula to represent \(P(t)\).

Adams-Bashforth m-Step Explicit Method¶

To derive an Adam-Bashforth explicit m-step technique, we form the interpolating polynomial \(P_{m - 1}(t)\) by

Then suppose \(t = t_i + sh\), with \(dt = hds\), we have

Since for a given \(k\), we have

| \(k\) | \(0\) | \(1\) | \(2\) | \(3\) | \(4\) |

|---|---|---|---|---|---|

| \((-1)^k\int_0^1 \binom{-s}{k}ds\) | \(1\) | \(\frac12\) | \(\frac{5}{12}\) | \(\frac38\) | \(\frac{251}{720}\) |

Thus

Adams-Bashforth m-Step Explicit Method

with local truncation error

Adams-Bashforth Two-Step Explicit Method

with local truncation error

Adams-Bashforth Three-Step Explicit Method

with local truncation error

Adams-Bashforth Four-Step Explicit Method

with local truncation error

Adams-Moulton m-Step Implicit Method¶

For implicit case, we add \((t_{i + 1}, f(t_{i + 1}, y(t_{i + 1})))\) as one more interpolating node to construct the interpolating polynomials. Let \(t = t_{i + 1} + sh\) and there is \(m\) points. Similarly we have the conclusions below.

Adams-Moulton m-Step Implicit Method

with local truncation error

Adams-Moulton Two-Step Implicit Method

with local truncation error

Adams-Moulton Three-Step Implicit Method

with local truncation error

Adams-Moulton Four-Step Implicit Method

with local truncation error

Note

Comparing an m-step Adams-Bashforth explicit method and (m - 1)-step Adams-Moulton implicit method, they both involve m evaluation of \(f\) per step, and both have the local truncation error of the term \(ky^{(m + 1)}(\mu_i)h^m\). And the latter implicit method has the smaller coefficient \(k\). This leads to greater stability and smaller roundoff errors for implicit methods.

Predictor-Corrector Method¶

Implicit method has some advantages but it's hard to calculate. We could use some iterative methods introduced in Chapter 2 to solve it but it complicates the process considerably.

In practice, since explicit method is easy to calculate, we combine the explicit and implicit method to predictor-corrector method.

Predictor-Corrector Method

Step.1 Compute first \(m\) initial values by Runge-Kutta method.

Step.2 Predict by Adams-Bashforth explicit method.

Step.3 Correct by Adams-Moulton implicit method.

NOTE: All the formulae used in the three steps have the same order of truncation error.

Example

Take \(m = 4\) which is the most common case as exmaple.

Step.1

From initial value \(w_0 = \alpha\), we use Runge-Kutta method of order four to derive \(w_1\), \(w_2\) and \(w_3\). Set \(i = 3\).

Step.2

Use four-step Adams-Bashforth explicit method, we have

Step.3

Use three-step Adams-Moulton

Then we have two options

- \(i = i + 1\), and go back to Step. 2, to approximate the next point.

- Or, for higher accuracy, we can repeat Step.3 iteratively by

Other Methods¶

If we derive the multistep method by Taylor expansion, we can have more methods. We take an example to show it.

Suppose we want to derive a difference equation in the form

We use \(y_i\) and \(f_i\) to denote \(y(t_i)\) and \(f(t_i, y_i)\) respectively.

Expand \(y_{i - 1}, f_{i + 1}, f_{i}, f_{i - 1}\), we have

and note that

Equate them with

We can solve out

Thus

Simpson Implicit Method

with local truncation error

Note that it's an implicit method, and the most commonly used corresponding predictor is

Milne's Method

with local truncation error

which can also derive by Taylor expansion in the similar manner.

Higher-Order Systems of First-Order IVP¶

Actually it just vectorize the method that we've mentioned above. Take Runge-Kutta Order Four as example.

Runge-Kutta Method for Systems of Differential Equations

Higher-Order IVP¶

We can deal with higher-order IVP the same as higher-order systems of first-order IVP. The only transformation we need to do is to let \(u_1(t) = y(t)\), \(u_2(t) = y'(t)\), \(\dots\), and \(u_m(t) = y^{(m - 1)}(t)\).

This produces the first-order system

with initial conditions

Stability¶

Definition 5.7

A one-step difference-equation method with local truncation error \(\tau_i(h)\) is consistent with the differential equation it approximates if

For m-step multistep methods, it's also required that

since at most cases, these \(\alpha_i\) are derived by one-step methods.

Definition 5.8

A one-step / multistep difference-equation method is convergent w.r.t the differential equation it approximates if

Definition 5.9

A stable method is one whose results depent continously on the initial data, in the sense that small changes or perturbations in the intial conditions produce correspondingly small changes in the subsequent approximations.

One Step Method¶

It's relatively natural to think the stability is somewhat analogous to the discussion of well-posed, and thus it's not surprising Lipschitz condition appears. The following theorem gives the relation among consistency, convergence and stability.

Theorem 5.8

Suppose the IVP is approximated by a one-step method in the form

If \(\exists\ h_0 > 0\) and $ \phi(t, w, h)$ is continuous and satisfies a Lipschitz condition in the varaible \(w\) with Lipschitz constant \(L\) on the set

Then

- The method is stable.

- The difference method is convergent iff it's consistent, which is equivalent to

- (Relation between Global Error and Local Truncation Error) If \(\exists \tau(h)\) s.t. \(|\tau_i(h)| \le \tau(h)\) whenever \(0 \le h \le h_0\), then

Multistep Method¶

Theorem 5.9 | Relation between Global Error and Local Truncation Error

Suppose the IVP is approximated by a predictor-corrector method with an m-step Adams-Bashforth predictor equation

with local truncation error \(\tau_{i + 1}(h)\), and an (m - 1)-step Adams-Moulton corrector eqation

with local truncation error \(\tilde \tau_{i + 1}(h)\). In addition, suppose \(f(t, y)\) and \(f_y(t, y)\) are continous on \(D = \{(t, y) | a \le t \le b, -\infty < y < \infty\}\) and \(f_y\) is bounded. Then the local truncation error \(\sigma_{i + 1}(h)\) of the predictor-corrector method is

Moreover, \(\exists\ k_1, k_2\), s.t.

where \(\sigma(h) = \max\limits_{m \le j \le N}|\sigma_j(h)|\).

For the difference equation of multistep method, we first introduce characteristc polynomial associated with the method, given by

Definition 5.10

Suppose the roots of the characteristic equation

associated with the multistep difference method

If \(|\lambda_i| \le 1\), for each \(i = 1, 2, \dots, m\) and all roots with modulus value \(1\) are simple roots, then the difference method is said to satisfy the root condition.

- Methods that satisfy the root condition and have \(\lambda = 1\) as the only root of the characteristic equation of magnitude one are called strongly stable.

- Methods that satisfy the root condition and have more than one disctinct root with magnitude one are called weakly stable.

- Methods that do not satisfy the root condition are called unstable.

Theorem 5.10

A multistep method of the form

is stable iff it satisfies the root condition. Moreover, if the difference method is consistent with the differential equation, then the method is stable iff it is convergent.

Stiff Equations¶

However, a special type of solution is not easy to deal with, when the magnitude of derivative increases but the solution does not. They are call stiff equations.

Stiff differential eqaution are characterized as those whose exact solution has a term of the form \(e^{-ct}\), where \(c\) is a large positive constant. This is called the transient solution. Actaully there is another important part of solution called steady-state solution.

Let's first define test equation to examine what happen with the stiff cases.

Definition 5.11

A test equation is the IVP

The solution is obviously \(y(t) = \alpha e^{\lambda t}\), which is the transient solution. At this case, the steady-state solution is zero, thus the approximation characteristics of a method are easy to determine.

Example of Euler's Method

Take Euler's method as an example, and denote \(H = h\lambda\). We have

so

The absolute error is

When \(H < 0\), \((e^H)^i\) decays to zero as \(i\) increases. Thus we want \((1 + H)^i\) to decay too, which implies that

From another perspective, consider the roundoff error of the initial value \(\alpha\) by,

At the ith step the roundoff error is

To control the error, we also want \(|1 + H| < 1\).

In general, when applying to the test equation, we have the difference equation of the form

To make \(Q(H)\) approximate \(e^H\), we want at least \(|Q(H)| < 1\) to make it decay to 0. The inequality delimit a region in a complex plane.

For a multistep method, the difference equation is in the form

We can define a characteristic polynomial

Suppose \(\beta_i\) are the distinct roots of \(Q(z, H)\), then it can be proved that \(\exists\ c_i\), s.t.

Again, to make \(w_i\) approximate \((e^H)^i\), we want at least all \(|\beta_k| < 1\) to make it decay to \(0\). It also delimit a region in a complex plane.

Definition 5.12

The Region R of abosolute stability for a one-step method is

and for a multistep method,

- A numerical method is said to be A-stable if its region \(R\) of abosolute stability contains left half-plane, which means \(\text{Re}(\lambda) < 0\), stable with stiff equation.

- Method A is said to be more stable than method B if the region of absolute stability of A is larger than that of B.

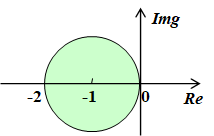

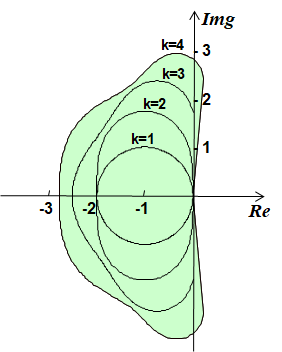

The region of Euler's method is like

Thus it's only stable for some cases of stiff equation. When the negative \(\lambda\) becomes smaller and get out of the region, it becomes not stable.

Similarly, for Runge-Kutta Order Four method (explicit), applying test equation, we have

And the region is like

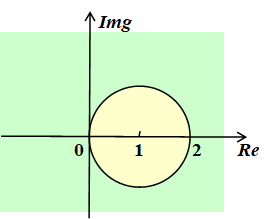

Let's consider some implicit method. Say Euler's implicit method, we have

whose region is

Thus it's A-stable.

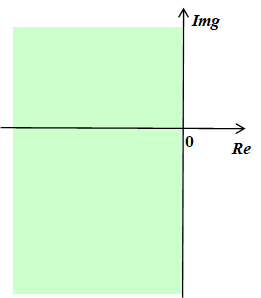

Also, implicit Trapezoidal method and 2nd-order Runge-Kutta implict method are A-stable, both with the difference equation

whose region is just right covers the left half-plane.

Thus an important intuitive is that implicit method is more stable than explicit method in the stiff discussion.

创建日期: 2022.11.10 01:04:43 CST