Chapter 2 | Solution of Equations in One Variable¶

Bisection Method¶

Theorem 2.0

Suppose that \(f \in C[a, b]\) and \(f(a)\cdot f(b) \lt 0\). The Bisection method generates a sequence \(\{p_n\}\) \((n = 0, 1, 2,\dots)\) approximating a zero \(p\) of \(f\) with

Key Points for Algorithm Implementation¶

- \(mid = a + (b - a)\ /\ 2, \text{but not } mid = (a + b)\ /\ 2\), for accuracy and not exceeding the limit of range.

- \(sign(FA)\cdot sign(FM)\gt 0, \text{but not }FA\cdot FM \gt 0\), for saving time.

Pros & Cons¶

Pros

- Simple premise, only requires a continuous \(f\).

- Always converges to a solution.

Cons

- Slow to converge, and a good intermediate approximation can be inadvertently discarded.

- Cannot find multiple roots and complex roots.

Fixed-Point Iteration¶

Theorem12.1 | Fixed-Point Theorem

Let \(g \in C[a,b]\) be such that \(g(x) \in [a,b], \forall x \in [a, b]\). Suppose \(g'\) exists on \((a,b)\) and that a constant \(k\) \((0\lt k \lt 1)\) exists, s.t.

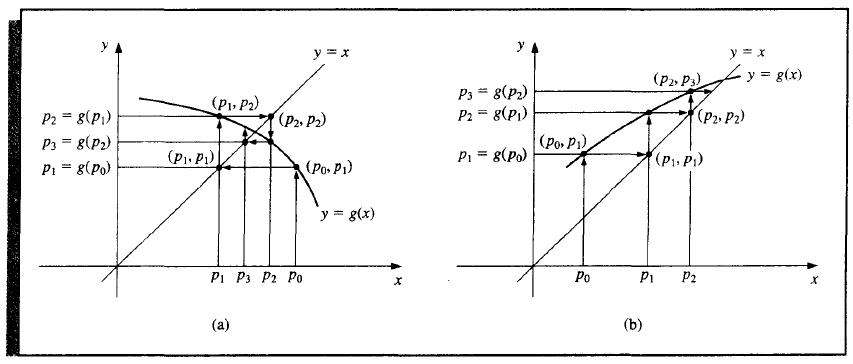

Then \(\forall\ p_0 \in[a,b]\), the sequence \(\{p_n\}\) defined by

converges to the unique fixed-point \(p\in [a,b]\).

.

Corollary 2.2

\(\Rightarrow\) The smaller \(k\) is, the faster it converges.

Newton's Method (Newton-Raphson Method)¶

Newton's method is an improvement of common fixed-point iteration method above.

Theorem 2.3

Let \(f\in C^2[a,b]\) and \(\exists\ p\in[a,b]\), s.t. \(f(p) = 0\) and \(f'(p) \ne 0\), then \(\exists\ \delta \gt 0\), \(\forall\ p_0 \in [p - \epsilon, p + \epsilon]\), s.t. the sequence \(\{p_n\}_{n = 1}^\infty\) defined by

converges to \(p\).

Error Analysis for Iterative Methods¶

Definition 2.0

Suppose \(\{p_n\}\) is a sequence that converges to \(p\), and \(\forall n\), \(p_n \ne p\). If positive constants \(\alpha\) and \(\lambda\) exist with

then \(\{p_n\}\) conveges to \(p\) of order \(\alpha\), with asymptotic error constant \(\lambda\).

Specially,

- If \(\alpha = 1\), the sequence is called linearly convergent.

- If \(\alpha = 2\), the sequence is called quadratically convergent.

Theorem 2.4

The common fixed-point iteration method (\(g'(p) \ne 0\)) with the premise in Fixed-Point Theorem is linearly convergent.

Proof

Theorem 2.5

The Newton's method \((g'(p)=0)\) is at least quadratically convergent.

Proof

More commonly,

Theorem 2.6

Let \(p\) be a fixed point of \(g(x)\). If there exists some constant \(\alpha \ge 2\) such that \(g\in C^\alpha [p-\delta, p+\delta]\), \(g'(p)=\dots=g^{(\alpha-1)}(p)=0\), \(g^{(\alpha)}(p)=0\). Then the iterations with \(p_n = g(p_{n-1})\), \(n \ge 1\), is of order \(\alpha\).

Multiple Roots Situation¶

Notice that from the proof above, Newton's method is quadratically convergent if \(f'(p_n) \ne 0\). If \(f'(p) = 0\), then the equation has multiple roots at \(p\).

If \(f(x) = (x - p)^mq(x)\) and \(q(p)\ne 0\), for Newton's Method,

It is still convegent, but not quadratically.

A way to speed it up

Let

then \(\mu (x)\) has the same root as \(f(x)\) but no multiple roots anymore. And

Newton's method can be used there again.

Pros & Cons¶

Pros

- Quadratic convegence

Cons

- Requires additional calculation of \(f''(x)\)

- The denominator consists of the difference of the two numbers both close to \(0\).

Accelerating Convergence¶

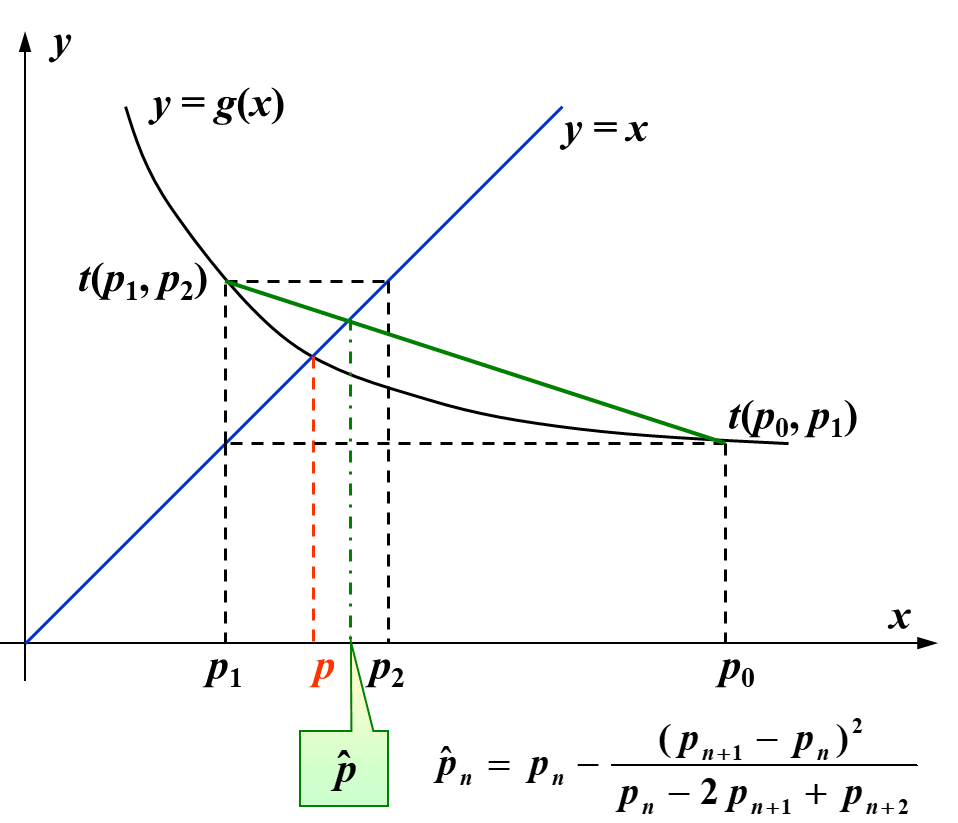

Aitken's \(\Delta^2\) Method¶

Definition 2.1

Forward Difference \(\Delta p_n = p_{n+1} - p_n\). Similarly \(\Delta^kp_n = \Delta(\Delta^{k-1}p_n)\)

Representing Aitken's \(\Delta^2\) Method by forward difference, we have

Theorem 2.7

Suppose that \(\{p_n\}\) is a sequence, \(\lim\limits_{n\rightarrow \infty}p_n = p\), \(\exists N\), s.t. \(\forall\ n > N\), \((p_n - p)(p_{n+1} -p) \gt 0\). Then the sequence \(\{\hat{p_n}\}\) converges to \(p\) faster than \(\{p_n\}\) in the sense that

The algorithm to implement it is called Steffensen’s Acceleration.

创建日期: 2022.12.21 01:34:02 CST