Lecture 5 Image Matching and Motion Estimation¶

Image Matching¶

Abstract

- Dectection

- Description

- Matching

- Learned based matching

1. Detection¶

We want uniqueness.

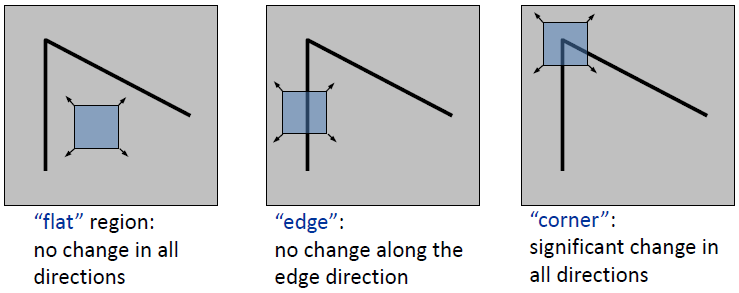

Corner Detection¶

Local measures of uniqueness

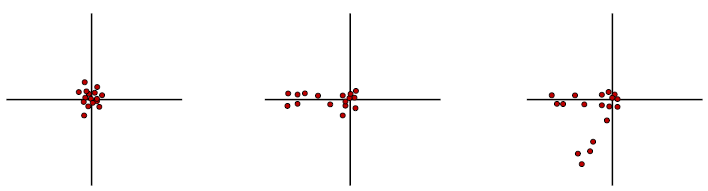

Principal Component Analysis (PCA) 主成分分析

To describe the distribution of gradients by two eigenvectors.

Method of Corner Detection

- Compute the covariance matrix \(H\) at each point and compute its eigenvalues \(\lambda_1\), \(\lambda_2\).

- Classification

- Flat - \(\lambda_1\) and \(lambda_2\) are both small.

- Edge - \(\lambda_1 \gg \lambda_2\) or \(\lambda_1 \ll \lambda_2\).

- Corner - \(\lambda_1\) and \(\lambda_2\) are both large.

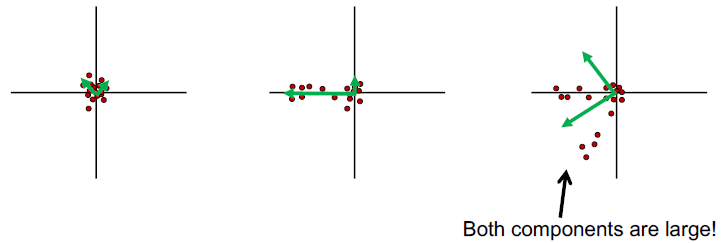

Harris Corner Detector

However, computing eigenvalues are expensive. Instead, we use Harris corner detector to indicate it.

- Compute derivatives at each pixel.

- Compute covariance matrix \(H\) in a Gaussian window around each pixel.

-

Compute corner response function (Harris corner detector) \(f\), given by

\[ f = \frac{\lambda_1\lambda_2}{\lambda_1 + \lambda_2} = \frac{\det(H)}{\text{tr}(H)}. \] -

Threshold \(f\).

- Find local maxima of response function.

Invariance Properties

- Invariant to translation, rotation and intensity shift.

- Not invariant to scaling and intensity scaling.

- How to find the correct scale?

- Try each scale and find the scale of maximum of \(f\).

- How to find the correct scale?

Blob Detection¶

convolution!

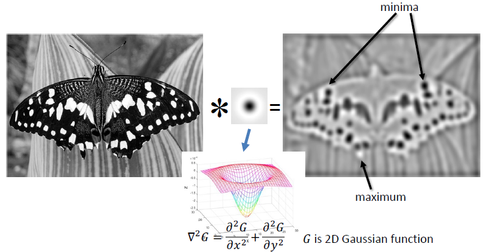

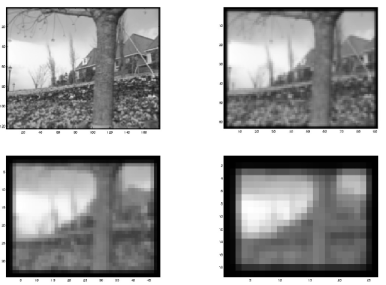

Laplacian of Gaussian (LoG) Filter¶

Example

Laplacian is sensitive to noise. To solve this flaw, we often

- Smooth image with a Gaussian filter

- Compute Laplacian

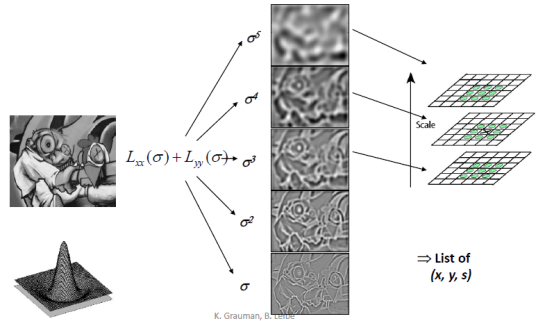

Scale Selection (the same problem as corner detection)¶

The same solution as corner detection - Try and find maximum.

Implementation: Difference of Gaussian (DoG)¶

A way to acclerate computation, since LoG can be approximated by DoG.

2. Description¶

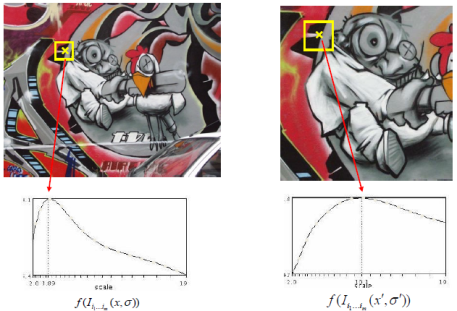

We mainly focus on the SIFT (Scale Invariant Feature Transform) descriptor.

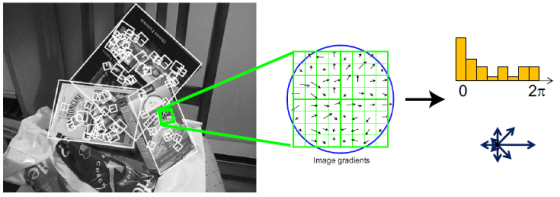

Orientation Normalization¶

- Compute orientation histogram

- Select dominant orientation

- Normalize: rotate to fixed orientation

Properites of SIFT¶

- Extraordinaraily robust matching technique

- handle changes in viewpoint

- Theoretically invariant to scale and rotation

- handle significant changes in illumination

- Fast

- handle changes in viewpoint

Other dectectores and descriptors

- HOG

- SURF

- FAST

- ORB

- Fast Retina Key-point

3. Matching¶

Feature matching¶

- Define distance function to compare two descriptors.

- Test all to find the minimum distance.

Problem: repeated elements

-

To find that the problem happens: Ratio Test

-

Ratio score = \(\frac{||f_1 - f_2||}{||f_1 - f_2'||}\)

-

best match \(f_2\), second best match \(f'_2\).

- Another strategy: Mutual Nearest Neighbor

- \(f_2\) is the nearest neighbour of \(f_1\) in \(I_2\).

- \(f_1\) is the nearest neighbour of \(f_2\) in \(I_1\).

4. Learning based matching¶

Question

Motion Estimation¶

Problems

- Feature-tracking

- Optical flow

Method: Lucas-Kanade Method¶

Assumptions¶

- Small motion

- Brightness constancy

- Spatial coherence

Brightness constancy¶

Aperture Problem¶

Idea: To get more equations → Spatial coherence constraint

Assume the pixel's neighbors have the same motion \([u, v]\).

If we use an \(n\times n\) window,

More equations than variables. Thus we solve \(\min_d||Ad-b||^2\).

Solution is given by \((A^TA)d = A^Tb\), when solvable, which is \(A^TA\) should be invertible and well-conditioned (eigenvalues should not be too small, similarly with criteria for Harris corner detector).

Thus it's easier to estimate the motion of a corner, then edge, then low texture region (flat).

Small Motion Assumption¶

For taylor expansion, we want the motion as small as possbile. But probably not - at least larger than one pixel.

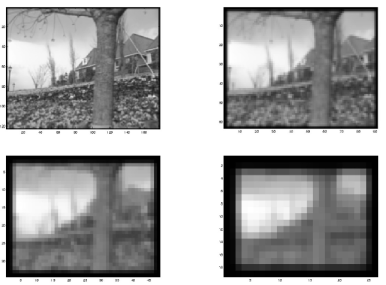

Solution¶

- Reduce the resolution

- Use the image pyramid

创建日期: 2022.11.10 01:04:43 CST